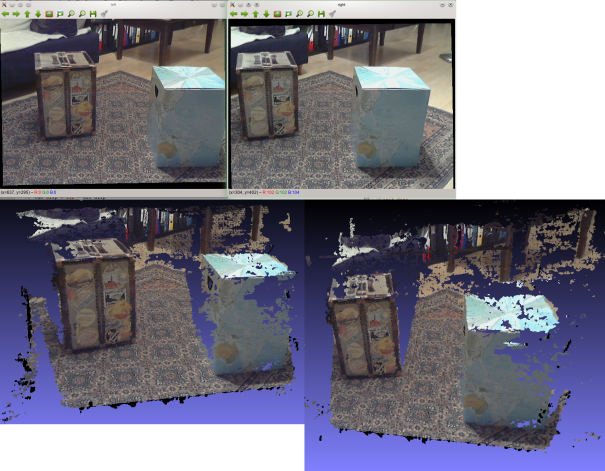

In my last posts, I showed you how to build a stereo camera, calibrate it and tune a block matching algorithm to produce disparity maps. The code is written in Python in order to make it easy to understand and extend, and the total costs for the project are under 50€. In this post, I’ll show you how to use your calibrated stereo camera and block matcher to produce 3D point clouds from stereo image pairs, like this:

Two images taken with a calibrated stereo camera pair, with two perspectives of the resultant point cloud.

Short and sweet: How to make a point cloud without worrying too much about the details

The entire workflow for producing 3D point clouds from stereo images is doable with my StereoVision package, which you can install from PyPI with:

```
pip install StereoVision
```

So let’s get straight to the good stuff: How to produce and visualize point clouds. Afterwards I’ll explain the code behind it and how it’s changed since the last posts, so that you’ll know whether you need to change anything on your end before you can start producing your point clouds.

One utility that comes with the package, images_to_pointcloud, takes two images captured with a calibrated stereo camera pair and uses them to produce colored point clouds, exporting them as a PLY file that can be viewed in MeshLab. You can see an example at the top of the page.

If you’ve built your stereo camera and calibrated it, you’re ready to go. You can call it like this:

```
me@localhost:~> images_to_pointcloud --help
usage: images_to_pointcloud [-h] [--use_stereobm] [--bm_settings BM_SETTINGS]
                            calibration left right output

Read images taken with stereo pair and use them to produce 3D point clouds
that can be viewed with MeshLab.

positional arguments:
  calibration           Path to calibration folder.
  left                  Path to left image
  right                 Path to right image
  output                Path to output file.

optional arguments:
  -h, --help            show this help message and exit
  --use_stereobm        Use StereoBM rather than StereoSGBM block matcher.
  --bm_settings BM_SETTINGS
                        Path to block matcher's settings.
```

On my machine this takes about 2.3 seconds to complete for two images with dimensions 640×480. Of that, 1.89 seconds is spent writing the output file – disk access is just about the most expensive thing you can do. That means that I need only about 0.4 seconds to read two pictures, create a 3D model of what they saw and do some very rudimentary filtering to eliminate the most obviously irrelevant points. That’s pretty good, if you ask me.

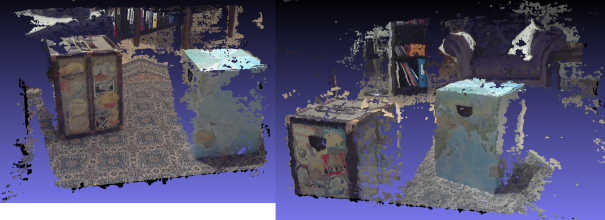

Here are some example results:

If you’re looking for an easy way to take pictures, you can use show_webcams to view the stereo webcam views and, if desired, save pictures at specified intervals.

As with any passive stereo camera setup, you’ll have the best results with a texture-rich background. If your results are bad, try tuning your block matching algorithm and make sure that you’ve told the programs the correct device number for the left and right camera – otherwise you might be trying to work cross-eyed, and that won’t work well at all.

So what’s changed in the sources?

The bottom line: If you’ve already calibrated your camera, you’ll need to modify your calibration or do it again. Also, you won’t be able to work with the StereoBMTuner for at least a few days to tweak your block matching algorithm. This is only a problem if you have a special setup – otherwise the default settings should work fine now.

If you’ve followed the previous posts, you’ll have built and calibrated your stereo camera and perhaps started producing disparity maps. If you were working with the old repository, you might have been producing bad calibrations. Since then there have been a few changes to core parts of the calibration program, calibrate_stereo.py, which means that you’ll need to update your old calibration. The reason is that I was getting bad point clouds using the disparity-to-depth matrix produced by OpenCV’s stereoRectify function. I’ve replaced that matrix with one based on the stereo_match.py sample in the OpenCV sources, which derives its values from the dimensions of the images you provide and makes some assumptions about the camera’s focal length. It should work fine for cameras with focal lengths similar to that of the human eye, which most webcams have.

To update, you can either use calibrate_cameras from the new package to recalibrate your camera, or correct your existing calibration: load the StereoCalibration, replace its disparity-to-depth matrix with the new one that’s produced in StereoCalibrator.calibrate_cameras (the blame for the old calibrate_stereo.py shows what changed), and then use the calibration’s export function to save it back to disk.
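For reference, the rule-of-thumb matrix from OpenCV’s stereo_match.py sample can be sketched in numpy as below. It assumes the principal point sits at the image center and guesses the focal length as 0.8 times the image width. The function name is illustrative and not part of StereoVision. A matrix like this is what `cv2.reprojectImageTo3D(disparity, Q)` consumes to turn a disparity map into 3D points.

```python
import numpy as np


def sample_disparity_to_depth(width, height):
    """Rule-of-thumb Q matrix modelled on OpenCV's stereo_match.py sample."""
    f = 0.8 * width  # guessed focal length in pixels, not a calibrated value
    return np.float32([
        [1, 0, 0, -0.5 * width],   # shift x so the image center is the origin
        [0, -1, 0, 0.5 * height],  # negate y and z, i.e. rotate 180 degrees
        [0, 0, 0, -f],             # about the x-axis so the y-axis points up
        [0, 0, 1, 0],
    ])


Q = sample_disparity_to_depth(640, 480)
print(Q)
```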

As much as I dislike inferring camera properties from the image dimensions, this should work fine for most examples and is accurate enough for my purposes. The matrix returned by OpenCV just wasn’t doing what it needed to, and the fix was easy and fairly robust for the use cases that I foresee for the library.

Another change is that the StereoBMTuner, which you might have read about in the last post, has changed quite a bit, as I explain in my next post. I had a good experience with it and was able to produce some okay-looking disparity maps, but in general I was so unsatisfied with the resulting point clouds that I started working with OpenCV’s StereoSGBM. It’s another block matching algorithm that also works quite well, and I discovered that my camera pair worked just fine with standard settings that I harvested from one of the OpenCV sample scripts. The new tuner class now allows tuning both types of block matchers by analyzing the block matcher and creating methods on the fly to pair with sliders for the correct parameters – a lot of fun to program.

So how does it work?

We’ve been working thus far with a CalibratedPair class, which is an interface to the two cameras in your stereo rig. It inherits from the StereoPair class, with the major difference that it takes a StereoCalibration object that it uses to deliver stereo-rectified images rather than the raw images taken with your cameras. This class used to directly hold a block matching object from OpenCV, restricting access to its parameters and taking care of all of its details. The complexity of dealing with the block matcher has now been externalized into a group of three classes.

The first is an abstract class, BlockMatcher, which implements the functionality shared by both of the OpenCV block matchers I’ve worked with so far behind a unified API, and defines the remaining interface that each subclass has to implement itself. That means that a CalibratedPair doesn’t actually need to know what kind of BlockMatcher you’re using – it delegates work to its BlockMatcher and lets the BlockMatcher take care of the details itself.
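The delegation described above can be sketched like this. The method name `get_disparity`, the simplified constructor and the toy `DifferenceMatcher` subclass are hypothetical, chosen only to illustrate the pattern; check the StereoVision sources for the real interface.

```python
from abc import ABC, abstractmethod


class BlockMatcher(ABC):
    """Hypothetical, stripped-down stand-in for the abstract base class."""

    @abstractmethod
    def get_disparity(self, pair):
        """Return a disparity map for a (left, right) image pair."""


class CalibratedPair:
    """Simplified: the real class also manages cameras and a calibration."""

    def __init__(self, block_matcher):
        self.block_matcher = block_matcher

    def get_disparity(self, pair):
        # Delegate: the pair doesn't need to know which matcher it holds.
        return self.block_matcher.get_disparity(pair)


class DifferenceMatcher(BlockMatcher):
    """Toy subclass standing in for the StereoBM/StereoSGBM wrappers."""

    def get_disparity(self, pair):
        left, right = pair
        return [a - b for a, b in zip(left, right)]


rig = CalibratedPair(DifferenceMatcher())
print(rig.get_disparity(([5, 7], [2, 3])))  # [3, 4]
```

Because `BlockMatcher` is abstract, it cannot be instantiated directly; only concrete subclasses that implement `get_disparity` can be handed to the pair.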

The other two are the BlockMatcher subclasses StereoBM and StereoSGBM. Each BlockMatcher does certain things – produce disparity maps, for example, or produce point clouds. Each one uses a block matching object from the OpenCV library and protects the parameters that are relevant for that block matcher, preventing the user from setting values that would result in errors. It’s still up to the user to make sure that the settings they use also make sense.

CalibratedPair now interacts with the BlockMatcher of your choice and gives you a PointCloud back. PointCloud is a new class that holds the coordinates of the points produced by the block matching algorithm paired with the colors stored in the image at that location. The class implements one very basic filter – removing the coordinates detected at infinity, where the block matcher wasn’t able to find a match. It can also export itself to a PLY file so you can view the point cloud in full color in MeshLab.
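The filtering and export steps can be sketched as follows. This mirrors the approach in OpenCV’s stereo_match.py sample (keep only pixels whose disparity is above the map’s minimum, then write an ASCII PLY with per-vertex colors); whether StereoVision’s PointCloud does exactly this is an assumption, and `filter_and_write_ply` is an illustrative name.

```python
import io

import numpy as np

PLY_HEADER = """ply
format ascii 1.0
element vertex {n}
property float x
property float y
property float z
property uchar red
property uchar green
property uchar blue
end_header
"""


def filter_and_write_ply(points, colors, disparity, out):
    """Drop points without a valid match, then write them as ASCII PLY.

    points:    (H, W, 3) float array, e.g. from cv2.reprojectImageTo3D
    colors:    (H, W, 3) uint8 array in RGB order
    disparity: (H, W) disparity map; its minimum marks "no match found"
    """
    mask = disparity > disparity.min()
    pts = points[mask]   # (N, 3)
    cols = colors[mask]  # (N, 3)
    out.write(PLY_HEADER.format(n=len(pts)))
    for (x, y, z), (r, g, b) in zip(pts, cols):
        out.write(f"{float(x):f} {float(y):f} {float(z):f} "
                  f"{int(r)} {int(g)} {int(b)}\n")
    return len(pts)


# Tiny example: two of the four pixels have a usable disparity.
disparity = np.array([[0.0, 5.0], [3.0, 0.0]])
points = np.arange(12, dtype=np.float32).reshape(2, 2, 3)
colors = np.full((2, 2, 3), 200, dtype=np.uint8)
buf = io.StringIO()
print(filter_and_write_ply(points, colors, disparity, buf))  # 2
```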

So now you know how to use the code yourself. I hope that the design is understandable enough that it’s easy for you to implement your own programs using it.

I will probably implement some more robust filtering at a later point in time, but for the moment I’m happy with these results. Hope you are too! If you’re using these programs to make point clouds yourself, I’d love to know what you’re doing with them and what your experience is.

Thanks for this post! I’ve been using OpenCV 3.0 this whole time, converting the setup/calibration code to 3.0 syntax but this version relies heavily on StereoBM/StereoSGBM so I couldn’t convert it manually… which means I installed 2.4.9 on another machine and ran your code which works well. Personally I am looking for depth maps rather than point clouds for robotic applications, but I enjoy your posts very much. I still have yet to learn/find a good way to get an accurate depth map in OpenCV 2.x or 3.0. Thanks again for your work!

I’m glad it helps you! Your work sounds interesting, what kinds of things do you work on?

As far as accuracy is concerned, that is difficult – you always have a bit of noise in the point cloud. PCL has some nice functions for filtering out statistical outliers, which might be a good starting point – I was thinking about using that as a method of the PointCloud class.
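The statistical outlier idea is simple enough to sketch without PCL: compute each point’s mean distance to its k nearest neighbours and drop points whose mean distance is unusually large. PCL’s StatisticalOutlierRemoval filter uses the same criterion (mean k-neighbour distance, threshold at the global mean plus a multiple of the standard deviation). The brute-force version below is an illustration only; its O(N²) memory use makes it unsuitable for full-size clouds, where a k-d tree is the usual choice.

```python
import numpy as np


def remove_statistical_outliers(points, k=8, std_ratio=2.0):
    """Keep points whose mean distance to their k nearest neighbours is at
    most (mean + std_ratio * std) of that quantity over the whole cloud."""
    points = np.asarray(points, dtype=float)
    diffs = points[:, None, :] - points[None, :, :]
    dists = np.linalg.norm(diffs, axis=2)
    # Sort each row; column 0 is each point's zero distance to itself.
    nearest = np.sort(dists, axis=1)[:, 1:k + 1]
    mean_dists = nearest.mean(axis=1)
    threshold = mean_dists.mean() + std_ratio * mean_dists.std()
    return points[mean_dists <= threshold]


rng = np.random.default_rng(0)
cloud = np.vstack([rng.normal(size=(50, 3)), [[100.0, 100.0, 100.0]]])
filtered = remove_statistical_outliers(cloud)
print(len(cloud), len(filtered))
```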

If you’re satisfied with the quality of your point clouds, though, the step to a depth map isn’t far. I’m also ultimately mostly interested in depth maps and will be posting on that in the near future.

I’m working on a simulated Mars rover so we can’t use ultrasonic/magnetic sensors – pretty much just cameras. Simple obstacle detection is my first concern but the ultimate goal is being able to identify samples in a field and retrieve them completely autonomously.

Everything I work on deals with sensors and motion, so I try to learn OpenCV with a lean toward data output so my robots can use coordinate/color/etc. data to perform calculated motions and report environmental conditions to the ‘mission computer’. This seems different from the goals of most OpenCV applications; I tend to see the vast majority of programs producing image/video output for humans to interpret rather than for a robot control program.

I still haven’t been able to produce a point cloud nearly as complete as yours. My cameras are 30cm apart, perhaps they should be closer together? Your setup looks like they’re closer than 30cm. Once the point cloud looks acceptable in MeshLab, I’ll need to figure out how to read the PLY contents to grab the data for the pixels that are closest to the camera – I bet this will not be easy!

Have you looked at the calibrate and stereo-match samples that come with OpenCV? I wonder why the tutorials and sample code use stereo functions without the calibration data? One reason I like your posts is because you saved the calibration data and used it later.

That sounds like tons of fun, pretty much anything to do with space gets me excited.

It sounds like we’re both doing fairly similar tasks. I use visual output to evaluate whether the algorithm is working correctly, but I’m also more interested in using the output in an automated fashion.

As far as your cameras are concerned, the distance between them is determined empirically during calibration, so in theory it doesn’t matter that much. However, you do want the distance to make sense. It’s a tradeoff game. When your cameras are farther apart you have more disparity, so you have a higher depth resolution. On the other hand, the farther apart the cameras are, the less overlap you have in image pairs, so you can’t get as much information out of a single image. As far as I can tell, many people use a distance of about 10 cm, which corresponds roughly to the distance between your eyes. Since my brain uses stereo vision for depth perception, I stuck with that as well.
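The depth-resolution side of the tradeoff follows from the standard rectified-stereo relation Z = f·B/d (focal length f in pixels, baseline B, disparity d in pixels). Differentiating gives |dZ/dd| = Z²/(f·B): the depth change caused by a one-pixel disparity error shrinks as the baseline grows. The focal length of 500 px and the 2 m target distance below are assumed values for illustration; the loss of image overlap at wide baselines is not modelled.

```python
def depth_from_disparity(f_px, baseline, disparity_px):
    """Z = f * B / d for a rectified pair (Z comes out in the baseline's units)."""
    return f_px * baseline / disparity_px


def depth_step_per_pixel(f_px, baseline, depth):
    """Approximate depth change for a 1-pixel disparity error: Z**2 / (f * B)."""
    return depth ** 2 / (f_px * baseline)


f_px = 500.0  # assumed focal length in pixels
for baseline in (0.10, 0.30):  # metres
    step = depth_step_per_pixel(f_px, baseline, depth=2.0)
    print(f"B = {baseline:.2f} m -> about {step * 100:.1f} cm per pixel at 2 m")
```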

Otherwise remember that stereo vision works with point matching, so you’ll get the best results on richly textured surfaces.

I wouldn’t work with the PLY files directly if I were you – it doesn’t make much sense to write to your hard drive just to read from the hard drive immediately. Keep data in your RAM as much as possible. If you take a look at the PointCloud class, you’ll see that it’s pretty simple and the points should be easy to address from the class. It’s also fairly compatible with PCL (hint: work will come soon in that area). I’d try working with it directly in your case so you have near real time reactions.
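Finding the points closest to the camera doesn’t require a round trip through a PLY file. Assuming the coordinates are available as an (N, 3) array in the camera frame, with colors as a matching (N, 3) array (the attribute names on StereoVision’s PointCloud, for instance `coordinates` and `colors`, are an assumption here; check the class), a numpy sketch could look like this. The Euclidean distance from the origin is unaffected by the axis sign flips in the disparity-to-depth matrix.

```python
import numpy as np


def closest_points(coordinates, colors, n=5):
    """Return the n points nearest the camera origin, their colors and distances."""
    coordinates = np.asarray(coordinates, dtype=float)
    distances = np.linalg.norm(coordinates, axis=1)
    order = np.argsort(distances)[:n]
    return coordinates[order], np.asarray(colors)[order], distances[order]


coords = np.array([[0.0, 0.0, 5.0], [1.0, 0.0, 1.0], [0.0, 0.0, -2.0]])
cols = np.array([[255, 0, 0], [0, 255, 0], [0, 0, 255]], dtype=np.uint8)
pts, cs, d = closest_points(coords, cols, n=2)
print(pts)
```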

Hey, if anyone is still looking for that filtering step (very useful in some cases), check out my code here: https://alvisememo.wordpress.com/2016/02/14/statistical-outlier-removal-with-opencv-and-flann-w-code/

Thanks!

Nice!

[…] this is finished, you can start producing 3d point clouds immediately, but you might be unsatisfied with the results. You can get better results by tuning your block […]

Hi, Daniel:

I’ve successfully calibrated my cameras and have run: images_to_pointcloud --use_stereobm CalData left_image.bmp right_image.bmp data_out.ply

I have not yet fiddled with tuning the block matcher.

It produced a PLY file that I can open with MeshLab (v1.3.2). The image has a lot of data, and has many of the characteristics of the input images (all good), but it is warped into a circle with two large empty ‘holes’ (weird). Should this be viewed another way, or exported into another format?

Thanks.

Hi Steve,

Viewing the data in MeshLab is my preferred approach. Of course you can use any representation you like, but I haven’t implemented anything other than the ability to work with the object in your own Python program if you’re using the library or, if you use the utility, exporting it as a PLY file. If it looks good but has some errors, there are a number of things that could be going wrong. Take a look at the next few posts on tuning block matchers, etc., and watch the video where I show some examples of why things work out in certain circumstances and not in others. There’s not a whole lot more I can add without seeing the data itself. Do expect your results to improve once you have better disparity maps, which is the step in the middle that you’re missing right now.

You’re more than welcome to fork the library and implement another export if you’d prefer having the data in another format, or to reformat it manually without using the library. I am very open to pull requests. Also, if you open an issue on GitHub with your wish I might get around to implementing it if I like the idea, but I can’t make any promises as to when.

-Daniel

Hi, Daniel:

Thanks – I did have better results after using the block matching tuner rather than stereobm. I need to learn to use the tune_blockmatcher app and its parameters to produce better disparity maps.

Glad to hear it!

Hi Daniel:

I’ve had some luck creating 3D image PLY files with the areas of interest showing up decently in MeshLab. I would like to be able to identify the coordinates of the points of interest, but I’m not having much luck using MeshLab 1.3.2. Is there a way to select a particular vertex and identify its 3D coordinates?

Also, I’m curious about the ply file vertex coordinates scale; they seem to range from -64.0 to +64.0. Is this a normalized scale? Is there a way to relate these coordinates to the scale used with my calibration grid?

Thanks!

Hi Daniel:

I figured out how to identify particular points of interest in the resulting PLY file by color-coding them (prior to analysis) and interrogating the color-code parameter values in the PLY file to find the {x,y,z} coordinates of those points.

However, I’m still trying to make sense of the {x,y,z} coordinate values in the PLY file. My grid parameters were input in centimeters, and the values shown in the PLY file are too small to be the actual distances from the cameras (using centimeters as the units).

I did notice that the calib.disp_to_depth_mat in the calibrate_cameras() function includes the image width, length and a pre-set focal length of 0.8*width (of image). Do these values define the scale of the output PLY file? I do know the actual focal length of the camera I have used, but I would need to better understand the width, length and image_size values.

Any thoughts you have on this matter would be greatly appreciated. Thanks.

Hi Steven,

Sorry that I’m not so quick with getting back to you at the moment – I’ve been really busy!

Calibrating the cameras using the constant 0.8*width is based on an example program in the OpenCV sources. When I worked entirely with the output provided by the calibration functions, I got rather implausible values with totally screwed-up topologies. I was very impressed with the example in the OpenCV sources and spent a lot of time trying to understand why it worked better before simply trying its fixed parameters on my own images as a rule of thumb. Because I was satisfied with the results, I decided to keep them and trust in them because they’re magical 😉

As far as the units are concerned, I’d suggest digging around in the OpenCV source documentation. In the API documentation I haven’t been able to find much about it and I still haven’t gotten around to trying to test it empirically. Have you found a scaling factor that makes sense for your ground truth data? If not, my first suggestion would be to examine the library sources. I’d look into it myself, since I’m really curious about this too, but in the next few weeks I’m traveling so much that there simply is no time left over for it. If you find anything, I’d be interested in the results!

Best,

Daniel

Oh, and just another note – the focal length should theoretically be determined by the calibration, so you should at least find an approximation of it if you export the calibration and look at the values there. Width and height refer to the pixel width and height of the image.

Hi, Daniel:

Thanks for the info. I’ll dig through OpenCV to see what I can find about scaling and focal length; I do know the manufacturer’s posted focal length of the cameras I used, but I need to figure out units, etc. I’ll post what I find here.

Cheers,

Steve

Hi, Daniel:

I did some digging and found useful information at this OpenCV discussion thread: http://answers.opencv.org/question/4379/from-3d-point-cloud-to-disparity-map/

The disparity-to-depth matrix, Q, has the following structure:

$$
Q = \begin{bmatrix}
1 & 0 & 0 & -C_x \\
0 & 1 & 0 & -C_y \\
0 & 0 & 0 & f \\
0 & 0 & a & b
\end{bmatrix}
$$

where the calculated terms are:

{Cx, Cy} = center of the image {x, y}

f = focal length

a = 1/Tx, where Tx is the distance between the two camera lens focal centers

b = (Cx - Cx’)/Tx (I think this compensates for misalignment)

For a 3D point with coordinates (X’, Y’, Z’), the disparity (d) and its position on the disparity image (Ix and Iy) can be calculated as:

$$
d = \frac{f - Z' b}{Z' a}
$$

$$
I_x = X' (d a + b) + C_x
$$

$$
I_y = Y' (d a + b) + C_y
$$

Then

$$
Q \begin{bmatrix} I_x \\ I_y \\ d \\ 1 \end{bmatrix} = \begin{bmatrix} X \\ Y \\ Z \\ W \end{bmatrix}
$$

and the 3D coordinates are $(X', Y', Z') = (X/W,\ Y/W,\ Z/W)$.

The tutorial disparity-to-depth matrix, Q, has the following structure:

$$
Q = \begin{bmatrix}
1 & 0 & 0 & -0.5\,\text{image\_width} \\
0 & -1 & 0 & +0.5\,\text{image\_height} \\
0 & 0 & 0 & -0.8\,\text{image\_width} \\
0 & 0 & 1 & 0
\end{bmatrix}
$$

Comparing this with the Q calculated from the calibration and rectification process:

– The estimates of Cx = 0.5*image_width and Cy = 0.5*image_height (in pixels) are not too bad, but I found that using the actual calculated magnitudes for these values seems to ‘tighten up’ the 3D image pixels, even though its visual center is somewhat offset.

– Setting the magnitude of the focal length, f, to 0.8*image_width is moderately close to the calculated Q value (same order of magnitude, within ~20% or so), but there is no rational basis for this value, and the 3D Z’ coordinate calculated with it will not be correct

– However, using the value of f calculated from the rectification process takes a leap of faith, since it doesn’t seem to relate to a number associated with the lens (my 2.8cm focal length lens had a calculated value of 998.3; I have no idea what the units are)

– Rows 2 and 3 of the tutorial Q matrix are the negatives of the corresponding rows of the calculated Q matrix (note the signs); this rotates the points 180 degrees about the x-axis so that the y-axis points up

– The calculated 1/Tx value seemed to come quite close to the measured value, when the measured value was in the same units as were used for the calibration grid square size

– Setting 1/Tx to the value 1.0 removes the scale effect of the distance between the camera lenses and throws off the 3D X’ and Y’ coordinate values

– Setting the (Cx-Cx’)/Tx value to 0 may be a decent approximation, as the calculated value seems to be small
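On the units question: OpenCV’s calibration reports focal lengths in pixels, not millimetres, which is why the calculated f does not look like the lens’s printed value. Assuming you know the sensor width from the camera’s datasheet, that the image spans the full sensor width, and that pixels are square, the two can be converted as sketched below. The 3.6 mm lens and 4.8 mm sensor are made-up example values.

```python
def focal_length_px(focal_mm, sensor_width_mm, image_width_px):
    """Lens focal length in mm -> focal length in pixels (OpenCV's unit)."""
    return focal_mm * image_width_px / sensor_width_mm


def focal_length_mm(focal_px, sensor_width_mm, image_width_px):
    """Calibrated focal length in pixels -> physical focal length in mm."""
    return focal_px * sensor_width_mm / image_width_px


# Hypothetical camera: 3.6 mm lens on a 4.8 mm wide sensor, 1280 px images.
f_px = focal_length_px(3.6, 4.8, 1280)
print(f_px)
```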

I ended up using the following disparity-to-depth matrix, Q’, using calculated terms (magnitudes) where possible, and applying the coordinate rotation (multiplying by -1) to rows 2 and 3 to get the image to behave nicely when viewed as a PLY file in MeshLab (otherwise it is an incomprehensible image, as you have mentioned). I tested the results against visual calibration images and got very good results (magnitudes in {X’, Y’, Z’} are correct with respect to the units used for calibration: centimeters)

$$
Q' = \begin{bmatrix}
1 & 0 & 0 & Q[0][3] \\
0 & -1 & 0 & -Q[1][3] \\
0 & 0 & 0 & -Q[2][3] \\
0 & 0 & Q[3][2] & Q[3][3]
\end{bmatrix}
$$
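In numpy, Q’ amounts to negating rows 2 and 3 of the calibrated Q. This sketch assumes the calibrated matrix has the standard structure shown earlier (zeros wherever Q’ has zeros), which is what stereoRectify produces for a horizontal stereo pair; `flip_q` is an illustrative name. In StereoVision the calibrated matrix would be the calibration’s disp_to_depth_mat mentioned above, and the result could be passed to cv2.reprojectImageTo3D. Apart from f = 998.3, the example values are made up.

```python
import numpy as np


def flip_q(q):
    """Return Q' from a calibrated Q by negating rows 2 and 3, i.e. rotating
    the reprojected points 180 degrees about the x-axis."""
    q_prime = np.array(q, dtype=float)  # copy, so the input is left untouched
    q_prime[1] *= -1.0  # [0, 1, 0, -Cy] -> [0, -1, 0, Cy]
    q_prime[2] *= -1.0  # [0, 0, 0, f]   -> [0, 0, 0, -f]
    return q_prime


q = [[1, 0, 0, -310.0],
     [0, 1, 0, -250.0],
     [0, 0, 0, 998.3],
     [0, 0, 0.1, 0.02]]
print(flip_q(q))
```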

Caveat: I did need to improve my calibration, which I did by removing image pairs from the pool until the average epipolar-line error dropped from 30 to 0.6 pixels for the set (with 1280×720 pixel images). The image removal process was the ‘brute force’ method of: remove an image, run the calibration, restore the image if there is no change, leave it removed if the error improved, repeat… It was not always obvious why an image would degrade the calibration, since it would pass through the calibration and look decent in the images.
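The brute-force pruning loop described above could be written roughly like this. `calibrate` is a hypothetical callable you would write around your own calibration code, returning the average epipolar error for a list of image pairs; `min_pairs` is an added safeguard that is not part of the described procedure. Each removal attempt reruns the full calibration, so the cost grows quickly with the number of pairs.

```python
def prune_calibration_images(pairs, calibrate, min_pairs=10):
    """Greedy backward elimination of calibration image pairs.

    pairs:     list of image pairs (any objects)
    calibrate: hypothetical callable returning the average epipolar error
               (in pixels) for a list of pairs
    min_pairs: safeguard so the pool never gets too small
    """
    kept = list(pairs)
    best = calibrate(kept)
    improved = True
    while improved:
        improved = False
        i = 0
        while i < len(kept) and len(kept) > min_pairs:
            trial = kept[:i] + kept[i + 1:]  # remove pair i
            error = calibrate(trial)        # rerun the calibration
            if error < best:
                kept, best = trial, error   # improved: leave it removed
                improved = True             # index i now holds the next pair
            else:
                i += 1                      # no improvement: restore it
    return kept, best


# Toy stand-in for a calibration: the "error" is the worst pair's score.
kept, best = prune_calibration_images([1, 2, 50, 3, 70], calibrate=max, min_pairs=3)
print(kept, best)  # [1, 2, 3] 3
```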

Hope this helps.

Cheers,

Steve

Hi Steve,

Sorry for getting back to you so late – this is some great research, thanks for digging that up! I’m traveling at the moment, which is why I needed so long getting back to you, but I’ll try out your solution and probably integrate it into the upstream code so everybody else can profit from it as well. Or would you be interested in modifying the code yourself and contributing it as a pull request?

Cheers,

Daniel

Hi Daniel,

I am working on a robotic vision project which goes like this. A robotic arm gets to access the boxes moving on the conveyor and manipulate (turn or move) them, while the box is moving, according to the instructions. Basically, my interest is to analyse the position and orientation of the boxes.

I started my work with a still image of a box captured from PMD IFM o3d200 sensors. I am trying 3d reconstruction with 2 pmd images taken from left and right side of a box. Further I would like to calculate its position and orientation. I am working on this with opencv. I would be really glad, if I would get any information to proceed further.

Thanks

Jyothi

Hi Jyothi,

Sorry for the late reply, I normally depend on the WordPress app and it apparently stopped sending me push notifications. I’ll look into it.

My advice to you would be not to rely on 3d sensing for obtaining such precise information. When you’re working in real time and are not just trying to e.g. notice obstacles, but manipulate objects with a manipulator arm, a point cloud isn’t really what you’re looking for. You’re looking for a complete model of the object you’re manipulating and that’s already in the area of object recognition.

If you’re doing something on an assembly line and you can control the packaging of the boxes, why not print a pattern onto the boxes’ sides? Then you could look for the pattern in the picture and compute a rigid transform to match the position you see. If you apply the same rigid transform to your modeled object you’ll know its position and the robot can use that to position its arm. This method would be more robust than stereo vision.
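Once the pattern’s points have been located in 3D (for example by triangulating them with the stereo pair), the rigid transform between the known model and the observation can be estimated with the Kabsch (SVD) method sketched below. This is one standard technique, not necessarily the approach Daniel had in mind; OpenCV’s solvePnP addresses the related problem of recovering a pattern’s pose from its 2D image points with a single calibrated camera. The model points and transform in the example are made up.

```python
import numpy as np


def rigid_transform(model, observed):
    """Least-squares rotation R and translation t with observed ~ R @ model + t
    (Kabsch method). model, observed: (N, 3) arrays of corresponding points."""
    model = np.asarray(model, dtype=float)
    observed = np.asarray(observed, dtype=float)
    mc, oc = model.mean(axis=0), observed.mean(axis=0)
    h = (model - mc).T @ (observed - oc)  # 3x3 cross-covariance
    u, _, vt = np.linalg.svd(h)
    # Guard against a reflection (det = -1) sneaking into the solution.
    d = np.sign(np.linalg.det(vt.T @ u.T))
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    t = oc - r @ mc
    return r, t


# Example: rotate a non-coplanar model 90 degrees about z, then translate it.
model = np.array([[0, 0, 0], [1, 0, 0], [0, 2, 0], [0, 0, 3]], dtype=float)
angle = np.pi / 2
r_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
observed = model @ r_true.T + np.array([10.0, 5.0, 0.0])
r, t = rigid_transform(model, observed)
print(np.round(r, 6), np.round(t, 6))
```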

This is a fairly advanced project but OpenCV has the stuff to take care of you here. Unfortunately, at the moment I’m involved in another large project so I’ll have to leave it at that – take a look at pose recognition and rigid transforms and that should set you in the right direction. Take care!

Cheers,

Daniel

Hello Daniel, I am making a technical report about stereoscopy and I would like to have permission to use your point cloud images, since the report is going to be public.

Hi Eric, feel free. I’d be interested in reading the report when it’s finished. Could you send it to me?

Definitely, but… since I’m from Brazil, it’s in Portuguese. Would you want it anyway?

Hmm… Will there be an abstract or executive summary in English? That’s what I’d be most interested in. Portuguese is not my strong point 😉

well, I’m afraid not =/ (at least by now)

this is just a simple report to give an introduction to work in the stereoscopy lab at my university. The report is already done, I am just asking for permission to use images from other works. Still, compared to your work mine is not really that impressive, I’ve just started working in this field.

I’m sure your work is great; I thought you might have been evaluating the use of my software or something similar. In that case, if the work will only be in Portuguese, I unfortunately can’t read it, but you’re very welcome to use whatever you like from my materials. Good luck!

I do use some of your codes, and they have helped me a lot! =D

Thank you ever so much!!

[…] Producing 3D point clouds with a stereo camera in OpenCV | Stackable […]

Never mind. Please ignore this.

Hello Daniel, concerning the output PLY files: can I ask what units they are in? My Z values seem strange; they are not the distance from the camera as I would expect. Are they scaled by a factor? Any advice on this would help.

Are they all in a plane with a small depth? I’m getting the same thing. Basically, a flat plane with points scattered around. It looks correct but the depth of the point cloud is clearly wrong.

Do you know how to do this with more than two images?

If you want to use more than one image, the family of algorithms you’re interested in is called Structure from Motion.

[…] Producing a Point Cloud with Stereoscopic Camera Setup […]

Hi Daniel, could you please tell me if it is possible to calculate the distance in meters from the ply file?

Hi Dominik, your distances will be in the units of your calibration target, i.e. the units you used for your chessboard squares. It’s been a while since I touched this source code, but I think if you dig around in the OpenCV docs for the relevant function calls that my code executes, they should tell you what the numbers mean. From there you can scale the distances in the PLY file to metric units.
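Assuming the cloud was reprojected with a calibrated disparity-to-depth matrix (as in Steve’s Q’ above), the coordinates come out in the units of the chessboard square size; with the 0.8*width rule of thumb they are not metric. Under that assumption, a minimal sketch for reading vertices from an ASCII PLY (whose vertex lines start with x y z, as in the export format shown in OpenCV’s stereo_match.py sample) and converting distances to metres could look like this. The function names and the centimetre scale are illustrative.

```python
import io

import numpy as np


def read_ascii_ply_vertices(stream):
    """Read x, y, z from an ASCII PLY whose vertex lines start with x y z."""
    vertex_count = None
    while True:
        line = stream.readline()
        if not line:
            raise ValueError("no end_header found")
        line = line.strip()
        if line.startswith("element vertex"):
            vertex_count = int(line.split()[2])
        elif line == "end_header":
            break
    rows = [stream.readline().split()[:3] for _ in range(vertex_count)]
    return np.array(rows, dtype=float)


def distances_in_metres(points, units_per_metre):
    """Distance of each point from the camera origin, converted to metres."""
    return np.linalg.norm(points, axis=1) / units_per_metre


ply_text = """ply
format ascii 1.0
element vertex 2
property float x
property float y
property float z
property uchar red
property uchar green
property uchar blue
end_header
0 0 150 255 255 255
30 40 0 255 255 255
"""
pts = read_ascii_ply_vertices(io.StringIO(ply_text))
print(distances_in_metres(pts, units_per_metre=100.0))  # chessboard measured in cm
```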
